MySQL Enterprise Monitor is a tool to watch and analyze multiple MySQL environments from a single web-based dashboard. More information is available on the MySQL homepage. Each MySQL instance is monitored by a small agent that connects to the instance, reads statistics, and sends them to the MySQL Enterprise Monitor (MEM) Server.

This setup is very easy. But if the MySQL server runs in a cluster failover configuration, there are some things to consider when installing the MEM agent.

What do you want?

Do you want two entries in the MEM dashboard, one for each physical server?

This is good because:

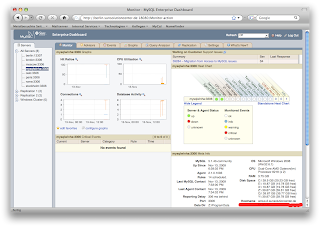

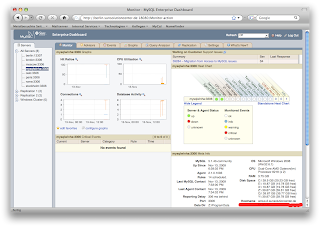


- You can monitor them separately and define different rules for each server in case they offer different capabilities.

- You can immediately see which physical server runs the MySQL instance. The other entry will always report either "MySQL server down" or "MEM agent not reachable".

This is not so good because:

- You cannot follow the data across a failover: each graph only covers one specific physical host.

- You get red alarms because the agent on the passive node cannot reach the MySQL instance. This alarm is harmless, but it will train you to ignore red alarms. Not good.

Do you want to see only one entry in the MEM dashboard that displays the data regardless of which physical server is running the instance at the moment?

This is good because:

- You have continuous data even if a failover occurred during the period. E.g. you can watch the cache hit rate graph of your MySQL server and you will see only a dip where the failover took place.

- You will not see false events like "MySQL server is down on the passive node". Of course it is down. That's why it is called the passive node ;-)

This is not so good because:

- It's not so easy to see which physical server currently runs the MySQL instance: you have to look at the meta info on the dashboard screen, which shows the physical hostname that runs the instance.

- You need to apply the same rules to both physical servers. This may be a problem if they have different capabilities, e.g. if the backup node is smaller.
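To make the difference between the two options more concrete, here is a small toy model in Python. It is not how MEM works internally; the host names `node-a` and `node-b`, the logical name `mysql-cluster`, and the sample values are all made up for illustration. It only shows that grouping the same samples by physical host yields two partial graphs, while grouping them under one logical entry yields one continuous graph with a dip at the failover.

```python
from collections import defaultdict

# Hypothetical samples: (minute, physical host that ran MySQL, cache hit rate).
# The failover from node-a to node-b happens between minute 1 and minute 2.
samples = [
    (0, "node-a", 0.97),
    (1, "node-a", 0.98),
    (2, "node-b", 0.41),  # cold caches right after the failover
    (3, "node-b", 0.85),
]


def group_series(samples, key_for):
    """Group samples into one time series per dashboard entry."""
    series = defaultdict(list)
    for minute, host, value in samples:
        series[key_for(host)].append((minute, value))
    return dict(series)


# Option 1: one dashboard entry per physical server.
per_host = group_series(samples, key_for=lambda host: host)

# Option 2: one dashboard entry for the clustered instance,
# no matter which physical server reported the sample.
per_instance = group_series(samples, key_for=lambda host: "mysql-cluster")

for name, points in per_host.items():
    print(name, points)
print("mysql-cluster", per_instance["mysql-cluster"])
```

With option 1 each entry only holds the two samples from its own node. With option 2 all four samples end up in a single series, and the failover is visible as the low value at minute 2.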

By the way: I recommend this second option, as the disadvantages are really small and the installation is even a little easier. Both procedures work for all common HA frameworks like SunCluster, OpenHA Cluster, Linux Heartbeat, and Microsoft Windows Cluster.